This Matt Taibbi interview with Tim Robbins is interesting for Robbins’s views on art and creativity. Emphases in BOLD.

Tim Robbins and the Lost Art of Finding Common Ground

The star of films like The Player and Bull Durham opens up about two tough years of pandemic politics, and worries society is purposefully phasing out the common meeting space

Matt Taibbi

After the Covid-19 crisis began, actor Tim Robbins was like everyone else in suddenly having both more time to think, and more unpleasant things to think about. Among other things, as a leader of The Actors’ Gang theater company, Robbins had to work through what living in a world of mandated long-term isolation might mean. What if people were no longer forced into contact with one another?

“I wondered,” he recalls now, “‘What happens when you eliminate the water cooler conversation?’”

Would we miss that “difficult conversation with someone who’s not one of your friends, but a coworker and a human being,” who’s “saying something that is not the way you see the world, but he’s right there and you have to hear it”? Robbins felt we might, because confronting a live human being forces people to use parts of their brain the Internet encourages them to bypass.

“When you eliminate that conversation, and everyone goes into isolation, and has their own little silos of thought, that’s incredibly dangerous for society. Because now you’re isolated to the point where you’ll no longer have any kind of discussion,” he says. Instead, he worries, “You’ll just have that little room you go into where everyone agrees with you, and we all say, ‘Fuck those other people.’”

Years later, the Oscar-winning actor known for left-liberal advocacy finds his thinking has shifted in significant ways. In part this is because the entertainment business remains mired in high-vigilance mode when it comes to pandemic restrictions, with an omerta still hovering over vaccine-related questions. Robbins himself was with the program early, which he now seems to regret. “I was guilty of everything that I came to understand was not healthy,” he says now. “I demonized people.”

However, he soon began to wonder why certain rules were being kept long after they lost real-world utility. For instance, deals struck in 2021 between studios and powerful unions like SAG-AFTRA, the Directors Guild of America, and Actors’ Equity barred the unvaccinated not just from working, but auditioning. This maybe, possibly made sense when the vaccines were thought to prevent transmission. But now?

“I get it. I understand the fear. I was there,” Robbins says. “But we’ve restricted people from working for too long.”

For decades Robbins occupied a unique role in American popular culture as a writer, director, and polarizing counterculture figure, like a taller, cheerier cross of Orson Welles and Peter Fonda. His acting reputation for a long time was inextricably (and unfairly, I always thought) tied to his status as a bugbear of the Gingrich/Bush Republican right. I knew without looking that Robbins had to be a central figure in Fox host Laura Ingraham’s 2006 best-selling insta-book about heathen lib entertainers who don’t know their place, Shut Up and Sing. Ingraham in fact described Robbins and former partner Susan Sarandon as “the leading stars of today’s Hollywood elite,” a compliment on the order of being called Mr. and Mrs. Satan. Ironically, Ingraham was mad at Robbins for talking about a “chill wind” of intellectual conformity that began blowing after 9/11, the same phenomenon she herself began railing against when it started to affect conservatives in recent years, and which Robbins is still criticizing now.

Though he’s won acclaim for serious films like Shawshank Redemption and Dead Man Walking, the Robbins filmography is also packed with performances where he goofs on himself by pitching in a garter belt (Bull Durham) or wearing absurd hair extensions (in Erik the Viking; I hope those were extensions) or by giving America maybe its best-ever portrait of a Hollywood douchebag, a performance that almost had to be career-imperiling in its accuracy (The Player). Robbins always had strong views and was especially vocal during the Iraq period, but his politics never got in the way of helping deliver some of the generation’s most enjoyable films. He even had fun with his own lefty reputation when he played a PBS newscaster taking a break between pledge drives to throw down in the Anchorman brawl.

Nonetheless, he now finds himself mixed up in controversies that place him at least somewhat on the outs with the same Hollywood political culture where he was once a leading figure. Areas of contention include the mandates and the passage of AB 5, a piece of California legislation originally aimed at gig-worker corporations like Lyft and Uber that ultimately forced hundreds of businesses, including theater companies, to offer minimum wages and benefits many claim they can’t afford. It’s a repeat of a controversy from the mid-2010s, when the Actors’ Equity union beat back a lawsuit filed by the likes of Ed Harris and Ed Asner and overrode a 2-1 vote by thousands of its members, who wanted to retain the ability to play for peanuts in a city where exposure is worth more than a few extra bucks on a paycheck.

Robbins worries that in this slew of new shibboleths about everything from vaccines to regulation of cake decorators, music arrangers and theater companies, society is revealing troubling changes in its ideas about what art and creativity are for. He sees hostility to the idea of bringing people together both in the physical sense, as in opening the doors to a theater, but also in the figurative sense of making sure art and entertainment are for everyone, not just for people with correct opinions. With bookstores, museums, theaters, and even water coolers disappearing all over the country, America seems to have it in for common spaces, as if keeping people from talking to one another is someone’s intentional political goal.

“I almost feel like there are forces within our society that just want art to die,” Robbins says.

The interview you’re about to read isn’t a red-pilling story, since Robbins isn’t and won’t ever be anyone’s idea of a conservative. It is however a warning from someone with an extensive enough track record as a progressive activist that he ought at least to have earned a hearing if he now feels he has to say a few uncomfortable things. Mostly, he’s warning about a didactic meanness he senses creeping into both politics and art. This he felt especially during the Covid-19 period, when we drifted from mere health policy into a bizarre Freaks-style collective shaming reflex, stressing the moral and mental unworthiness of people who for whatever reason — there were many — refused official advice.

“I heard people saying, ‘If you didn’t take the vaccine and you get sick, you don’t have a right to a hospital bed,’” he says. “It made me think about returning to a society where we care about each other. Your neighbor would be sick, and you’d bring over some soup. It didn’t matter what their politics were, you’re their fucking neighbor,” he says, shaking his head.

“I think we lost a lot of ourselves during this time.”

More below (interview edited for length and clarity):

Matt Taibbi: When Covid-19 arrived, what happened with The Actors’ Gang?

Tim Robbins: We had to shut down, obviously. We went on to a Zoom workshop kind of mode. As an organization, we decided that we weren’t going to lay anyone off or furlough anyone. A lot of arts organizations did. We kept everyone on staff and on health insurance. We found other ways to do our work online with our education programs. Then for our prison project, we started communicating by mail. We would send them packets every month with outlines of exercises they could do on their own. A lot of them would write, and send it back to us, because we wanted to keep the relationship going with the people that we were working with before the pandemic.

So that was great. It provided us an opportunity to hire more returning citizens, the ones that had done their time and were being paroled, which was another bizarre thing — you had guys that were in jail for 30 years that got out right during Covid, and went right back into isolation. Isn’t that insane? But overall, it was a difficult two years.

Matt Taibbi: As re-opening approached, what happened?

Tim Robbins: We were capable of opening last September, but there were still all of these restrictions. I had a problem with this idea of having a litmus test at the door for entry. I understood the health concerns, but I also understand that theater is a forum and it has to be open to everybody. If you start specifying reasons why people can’t be in a theater, I don’t think it’s a theater anymore. Not in the tradition of what it has always been historically, which is a forum where stories are told and disparate elements come together and figure it out.

That’s what it’s been for. People figure out their relationship with the gods, with society, with each other. But at the door, you don’t say you can’t come in, because you haven’t done this or that. I had a problem with that. So I waited until everyone could be allowed in the theater. We opened up with a show called Can’t Pay Don’t Pay in April last year.

I think a lot of theaters had a problem rebounding, because the audiences are either skittish about being in rooms with other people, or (laughs) they just don’t like theater that much anyway. The pandemic was a good excuse to not go!

But the most challenging thing has been dealing with the actors themselves, because there is this skittishness and fear, and it’s still in people. Unlike England — I was lucky enough to spend a lot of time last year in London, and they got back a lot sooner than we did. There was an attitude there, it’s that “keep calm and carry on” thing. You know, we got bombed last night, but we’re getting up today. When they reopened their theaters, they reopened them for everybody. They never excluded anybody. And when they got back to it, their West End was thriving, and continues to thrive today.

Maybe the reason we have problems is that some people are still skittish about being in a crowd with other people, but it could also be that maybe 30 to 40% of theater audiences were told they weren’t welcome. And maybe there’s something in that: when you’re told you’re not welcome, you might not necessarily want to go back.

Matt Taibbi: When you started to question these things, what was the reaction?

Tim Robbins: I totally understood it in the first year. I was compliant with everything. I locked down, I isolated, I was away from people for seven months. I bought into it. I demonized people. I was guilty of everything that I came to understand was not healthy. I was angry at people that weren’t wearing masks, and protesting about it in Orange County. Yet, a month later I was protesting for BLM in the streets with a mask on. A week after that, I kind of had to do a self-check on that. I knew there was a little bit of hypocrisy going on there.

I had a really good friend that died from it early on. I was angry. I was fearful, and I did everything I could to help stop the spread, but also I kept my eyes open and at my age, I think one of the most important things that I’ve been able to do is understand that I’m not right all the time, and I have to check myself and see where the hypocrisy lay. So I started having more questions.

Soon it’s a year on, and two years on, and people are still stuck with these restrictions despite the fact that we now know that the vaccine didn’t stop transmission and didn’t stop people from getting it. Once the CDC changes policy and says basically that both the vaccinated and the unvaccinated are capable of getting Covid, the restrictions don’t make sense anymore, particularly regarding employment.

And there were a lot of people in SAG-AFTRA and Actors’ Equity that were kept from auditioning for the past two and a half years, and really still are today. Their livelihoods are threatened. They can’t participate yet. There’s no rhyme or reason with it. I think people are holding on because there’s still a fear, but it’s too long now.

I’m not against the vaccine, I’m just of the belief that your health is determined by your relationship with your body and your mind. And if you believe that the vaccines have helped you, then all power to you. If for some reason you didn’t vaccinate and you made it through this, all power to you too. You shouldn’t be excluded from society for doing that. I am a hundred percent sure of that. I think that was a mistake. I think it was done out of fear. I forgive it, but to continue it at this point is irrational, in my opinion.

No one has stood up for people who might be immuno-compromised or couldn’t take the vaccine, or people that are just holistic and don’t take any kind of medicine at all. Or people — this is the most important one — people that have had Covid and have natural immunity.

The other thing is, where does this end? How many boosters do you have to get to remain eligible for work? How long do we extend this?

Matt Taibbi: You feel something important has been lost in the last few years. Can you elaborate?

Tim Robbins: These last few years, they’ve taught me so much, about what is right, what is wrong. There’s so much empowerment of people that feel that they are being incredibly virtuous and generous, yet are doing things that are not very kind to other people. I think we’ve lost ourselves during this time. Just a brief stroll through social media and you’ll find that out. (laughs) The internet has become like a bar that you go to, and you open the door, and everyone yells, “Fuck you! Get out!”

Matt Taibbi: (laughs) I’m vaguely familiar…

Tim Robbins: It’s amazing. It’s taught me a lot about human nature, about how easy it is for people to turn on other people, and that when people do things that are destructive to other people, they often think they’re being virtuous. It’s been that way throughout history.

That’s something I already knew as a writer. When you’re making a character, you try not to make it all black and white, good and evil. I really understood much more profoundly what happens with the turn, how people turn. You go from someone that is inclusive, altruistic, generous, empathetic, to a monster. Where you want to freeze people’s bank accounts because they disagree with you. That’s a dangerous thing. That’s a dangerous world that we’ve created. And I say ‘we,’ because I was part of that. I bought into that whole idea early on.

Matt Taibbi: There’s also been a phenomenon of bureaucratic mission creep. Could you talk about how that’s affected your industry?

Tim Robbins: Our union out here, Actors’ Equity, decided about five years ago to end an agreement that the union had with local theaters here that were under 99 seats. We had a thriving small theater scene in Los Angeles, and Actors’ Equity decided that they wanted to end that 99-seat agreement. Then they had a vote, and two-thirds of their membership voted to keep the agreement, but the AEA ended it regardless. Producers couldn’t make money off of productions. I think Actors’ Equity has a fantasy that if they close all the small theaters in Los Angeles, a bunch of mid-level theaters will rise up, and there will be more contracts…

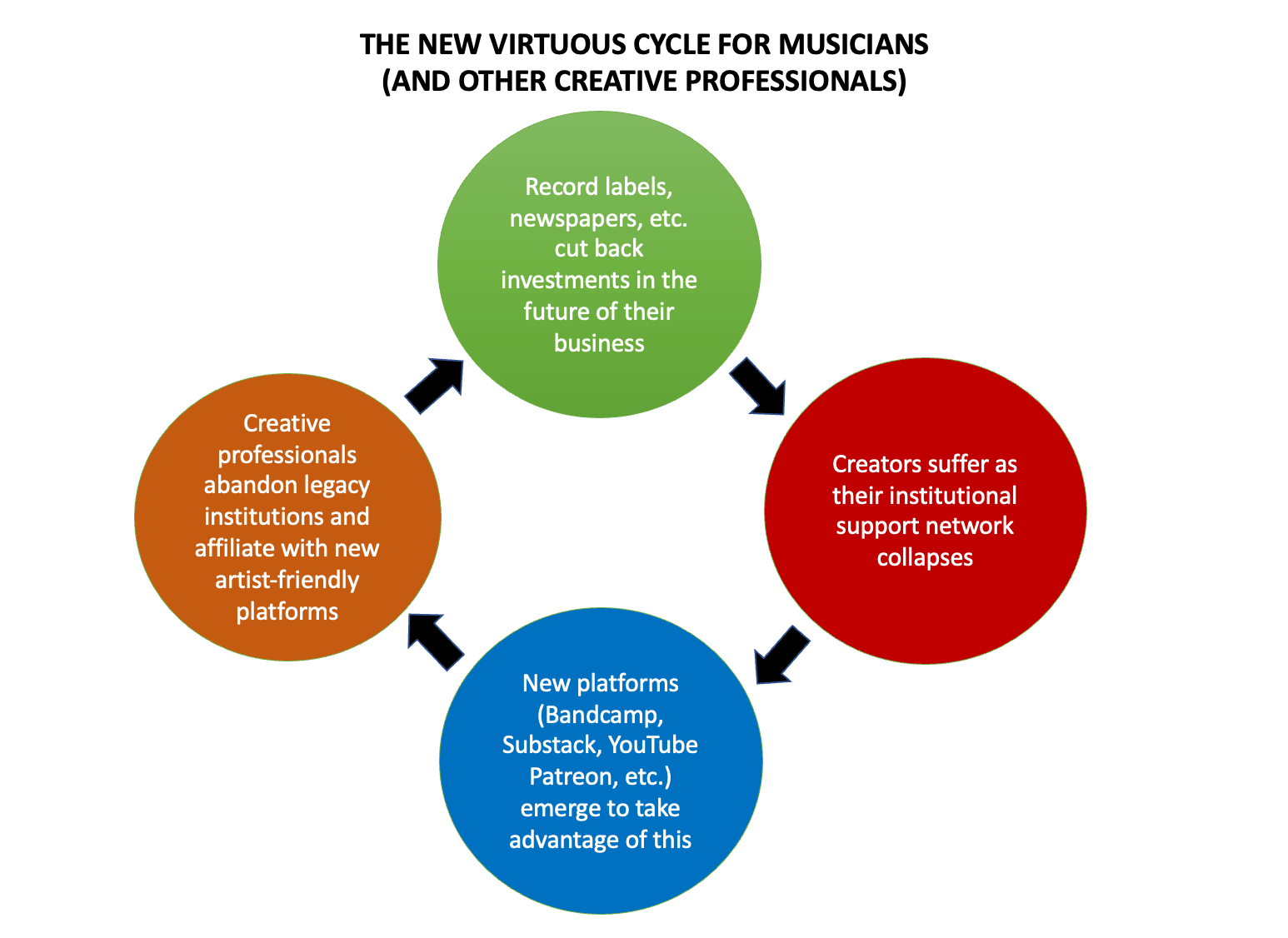

On top of that, we had a bill pass called AB 5, which was intended to target gig workers like Uber and Lyft drivers. And Uber and Lyft were wealthy enough to lobby in Sacramento to get a carve-out from that legislation. What was left were small theaters and musicians. They said, “Listen, are you going to try to keep us from rehearsing, or just jamming?”

I almost feel like there are forces within our society that just want art to die. It’s now not only just the scolds from the right, like in the old days when the Moral Majority wanted art to die. Now it’s unions and people that are, again, claiming virtuous reasons for all of this. The truth is a lot of local theater has failed, and the pandemic helped put the nail in the coffin.

Small theater companies of people who just want a way in, in a business that’s so devoid of content. If you’re lucky, you get an audition for a walk-on in a sitcom, and how’s that feeding your artistic soul? So you had a huge amount of actors in Los Angeles that just wanted to do quality work, be in a play where they could get to say real lines by real authors. It was something they were volunteering for, that would keep their instrument sharp. And now they were being told they can’t do that.

Matt Taibbi: To what end, do you think?

Tim Robbins: Listen, Matt, if you told me 20 years ago that there would be no video stores where you could talk to a clerk and see what that person might be recommending, or no record stores where you could go see what’s new in music, or no bookstores in most towns, I would’ve told you you were crazy. But we’re here. This is part of a larger movement away from the gathering place.

Theaters are failing, and movie theaters are not doing so well. Any form of gathering place other than a bar has pretty much been hurting. You know, it’s no surprise to me how well sports have been doing during this whole period. Stadiums are packed because people need community.

I’ve always thought of baseball as a place where I can go and get away from the politics and just sit and high-five some dude that might have voted for someone I don’t like. That’s important.

Matt Taibbi: Art and movies used to play that role, too, but are they being discouraged in that function?

Tim Robbins: Yes. This is the whole purpose of theater, to bring people that don’t agree into the same place where they can agree on their own shared humanity.

That’s the other problem. It got incredibly politicized here. It wasn’t that way in London. What I felt there wasn’t the divide that there was here. I attended a couple of the marches that were happening in [early] 2021, which was when they were under their lockdown. There was a street presence of people who were coming out already, against mandates and passports. I went down and I talked to some of those people and I realized: it’s not a left-right thing there. These weren’t a bunch of National Front-type people. These were old hippies and homeopaths. I tweeted about it and I got this hellish response. I realized that we have been programmed in a different way in this country, to think that if someone doesn’t get the vaccine, they must be a Nazi.

Tim Robbins: I’m trying to understand why we’re in the situation we’re in, socially, with each other. That’s what concerns me the most. I believe that if the vaccine helps you, that’s great. But, I have kind of a hard line on freedom. You can’t over-regulate people’s lives. I don’t know what that makes me, what label that puts on me, but I am an absolutist on freedom.

I’ve done a lot of work in organizing and in protest movements and in building coalitions. Community building is always about an organizer walking into the room and knowing that the people in this room do not agree on everything. But I, as an organizer, have to find the linchpin, find the common thread. And when I find that, I’m going to build the movement around that.

What I’ve been seeing over the past few years has been the opposite of that. It’s going into a room and saying, “You don’t have the right to speak because you don’t agree with our way of thinking.” Or it’s, “You’re an idiot for thinking this or that. Shut up. Get your vaccination.”

You’re not going to build any movement that way. All you’ll do is alienate people. And whether it’s organizing around social justice or criminal justice reform or creating more equity — all legitimate important things that need to be done — organizers who know how to do it don’t create division. They don’t cancel people. Because once you’ve done that, you’ve lost those people forever. You’re not getting them back.

Matt Taibbi: Don’t art and movies try to do the same thing? You’re looking for the unifying theme, the thing everyone thinks is funny, or everybody enjoys? The linchpin that holds an audience together?

Tim Robbins: Trying to find the thing that unites us. Exactly. Right. You’re trying to find something that we all can laugh at or a shared feeling that we can all have.

Dead Man Walking was a real challenge because it was a dance we had to do. I didn’t want to make the movie just for people that were against the death penalty. I wanted to make it for everybody, and I wanted people to have a discussion about it. So we had to give dignity and screen time and respect to the people that had lost their family members, and were for the death penalty. And I thought we did it in a way that was respectful enough so that people, if they did not agree with getting rid of the death penalty, could still watch that film and see their shared humanity in the pain of the mother, in the pain of Sister Helen, in the pain of the killer himself.

That’s the difficult thing to do. But when you do that, then you create dialogue. Helen will tell you that that movie changed the picture for her. Beforehand, everywhere she went, 10 or 15 people showed up. Now she’s got a thousand people coming out, and they have a discussion about the death penalty. You know, we did a play version of it, and we were in 140 universities over the course of 10 years. There would be 30 young people getting together to do a play. And when the play would open, there would be symposiums from the law department, from the divinity school, from sociology departments. They would have discussions and meetings and debates, and the actors themselves would have to play parts that they didn’t necessarily agree with, and have to go into that mindset. And it created a fertile ground for discussion and for growth. People could respect each other and both sides of the opinion.

Throwing your doors open for the public means you throw them open to everybody. And once, no one even thought twice about that. It’s the decent thing to do. Then during the pandemic, I heard people saying, “If you didn’t take the vaccine and you get sick, you don’t have a right to a hospital bed.”

And I just started thinking, “What about all the junkies?” That’s the choice they made, too. It’s their own fucking vein. Are we kicking them out? No. You take care of them.

Matt Taibbi: Smokers, obese people who have diabetes…

Tim Robbins: You save their lives. Because they’re part of us. They may be troubled and they may be having to take these drugs for whatever emotional reasons they are, but what the hell man, you gotta take care of them.

And like you say, it could be that you apply that to obesity, you could apply that to any physical malady that has anything to do with something you put in your body. Well, that’s a choice that you made. Maybe a bad choice, but don’t worry about it. We got you. And then you have the choice as to whether you want to change your life or not.

That, for me, is a functioning society.