Blog

An Algorithm for Quality

“Can you imagine how your view of the world might change if you spent your time online in a place optimized for reading what you care most about, rather than just endless scrolling? What would it feel like to check a feed that’s trying to catch you up on what you deeply value rather than keeping you feeling anxious, angry, and alone?”

This is what tuka does. Read on…

AI and Music

This is a recent post from Ted Gioia on the invasion of AI generated music. My comment below.

The Number of Songs in the World Doubled Yesterday.

I guess I’m disappointed at how this AI revolution is playing out. We were promised a new AI-constructed Bach with better music. But all we’re getting is more music.

The dam broke on the levee, and the AI songs are pouring out in torrents, threatening to wash away everything else.

Now, I can’t claim to have listened to all 100 million of these songs. But I’ve heard enough AI music to realize how lousy it is.

My Comment:

By the not-so-iron law of supply and demand, when the supply doubles the price falls approximately in half. But there is a zero bound to price and the price of creative digital content is approaching that fast. This merely means we have to stop focusing on transaction value of creative content. Instead, the value is realized in monetizing the audience reach – or peer networks in tech speak.

This post is mostly about the quality of AI generated art. No one is going to listen to a million new AI songs to find that subjective artistic quality – so how is the AI industry going to find their audience? Through AI generated recommendation engines. But just like AI can’t create music, it also can’t judge its quality. This is always going to be the “human” advantage in the digital content world.

At our digital content platform start-up, tukaGlobal, we have long recognized this reality. All this machine learning and AI cannot deliver to us the music we like. Humans, through direct human interactions do that. That’s how we all got introduced to new music in high school and college. In the digital world these connections can happen through social media dynamics. P2P networks represent the leveraging power of the human. Web3.0 will eventually deliver that world to us in a decentralized manner with blockchain. Harnessing social media dynamics will solve the search, discovery, and promotional functions of the digitized art world. Think of it this way – the most powerful algorithm in discriminating and promoting creativity is the human network. These networks are also the solution to monetization.

Get Ready to Pay More for Music

You can’t give people all the music they want for $9.99 per month.

That doesn’t generate enough cash to keep all the stakeholders happy. Somebody must get squeezed.

This is reposted from Ted Gioia’s Substack channel. He confirms the future trend we have been predicting here, especially about the streaming content business model. The bottom line is that streaming content is more expensive than buying content if artists are able to cut out the middleman. Digital technology with blockchain can do that.

Get Ready to Pay More for Music

I keep seeing cheery news stories about growth in the music business. But the numbers are misleading.

Highly misleading.

The growth is happening in all the worst places. Money is shifting from creative artists to technocrats, lawyers, and pencil pushers of all sorts.

If you play a guitar your career prospects suck. But if you’re a fund manager who owns song publishing rights, you can earn huge paychecks.

If you write songs, you may struggle to pay the rent. But if you’re a lawyer who specializes in song copyright lawsuits, you’re living in high style.

If you make music, you keep asking why it’s so tough. But if you’re a technocrat who streams music, you’re sitting pretty.

Is the music business really growing, if all the excess cash flow goes to to lawyers, fund managers, Silicon Valley overlords, and high-powered techies?

That’s like saying you’re healthy because the parasite sucking your lifeblood gets larger every day.

If you actually make the music, the industry isn’t healthy. Even if you’re just a music lover, things are looking ugly.

And it’s about to get a whole lot worse.

I’ve been warning for five years that the new economic model in the music business is broken. You can’t give people all the music they want for $9.99 per month.

That doesn’t generate enough cash to keep all the stakeholders happy. Somebody must get squeezed.

If you don’t grasp how broken the streaming model is—not just for music but also in Hollywood and other creative fields too—you won’t even understand the daily media news.

The broken economic model explains why writers in Hollywood are striking right now.

The broken economic model explains why writers are so fearful of AI—even demanding that the new contract prohibits AI-generated screenplays—and why musicians should be just as worried.

The broken economic model explains why Netflix has raised subscription prices sharply—even while reducing the range of film and TV options on its platform

The broken economic model explains why so many hot new artists are coming out of TikTok and social media, not traditional record labels.

The broken economic model explains why songwriters are selling their catalogs to investment groups.

The broken economic model explains why old music is killing new music in the marketplace.

I’ve been saying this for so long that I sound like a broken record (remember those?).

And how have things worked out for Spotify during those five years?

Check out all the red ink on the chart below. The company has never shown a profit, and results have worsened since listing on the stock exchange.

And now you’re going to pay a price for this. . . .

Physical vs. Digital Gold

I reprint the free portion of this Substack post by Ted Gioia. Emphasis in bold and my comments in red. The reliance on physical products reflects the market economics of controlling the supply and thus the price and profit margins. This is what the branding industry has flourished on. Digital content (music, blogs, books, videos) are given away virtually free in order to build an audience (peer network) that one can then sell higher margin goods and services to.

This makes perfect sense, given the economics of the digital world and how it augments the physical world. This is what Google and Facebook and LinkedIn and Apple and Amazon all do. The question then is how does the individual creator build, own, control, service, and monetize their peer networks? It’s kind of like a very valuable Rolodex file.

tuka is designed exactly for this need for users to create and monetize digital data value. It’s all about the data networks and the value they represent. The online world is slowly moving in tuka‘s direction, but to decentralize value creation will still require a blockchain platform.

……..

Half the people buying vinyl albums don’t own record players. They treat their albums like holy relics—too precious to use and merely for display among other true believers. [Yes, but that’s collecting, not listening.]

Readers were shocked when I recently reported that statistic. I was a little stunned myself. But those are the facts.

Of course, there’s a lot about the vinyl revival that defies logic. What other business relies on a 60-year-old storage technology? That would be like running my writing career with a teletype unit and mimeograph machine.

And it’s not just vinyl. Cassette tapes—a cursed format that always unraveled at the worst possible time—are hot again. Even 8-track tapes, a longtime target of ridicule and abuse, are selling for thousands of dollars.

Why are people buying this stuff?

A new research report from Andrew Thompson at Components, released earlier today, helps us understand the bigger picture. Thompson analyzed 47,703,022 Bandcamp sales—involving almost five million items. And what he learned was startling.

Success in the music business is all about selling physical objects.

The Internet supposedly killed physical music media more than two decades ago. After iTunes was launched in 2001, there was no looking back. At first the music industry pivoted to digital downloads, and then everybody in the business jumped on the streaming bandwagon.

But it’s now 2023, and streaming platforms still aren’t profitable. [They never will be unless they can find a way to monetize those user networks.] However, Bandcamp is—and now we know why.

It’s all about tangible items.

Consider this chart—which looks at the correlation between revenues on Bandcamp and an artist’s reliance on physical merchandise.

Vinyl helps drive this. But it is only just part of a larger story. Artists can sell everything from clothing to compact discs on Bandcamp. And, of course, they can sell digital tracks too.

But the numbers make clear that physical merchandise is the smart business model.

According to Andrew Thompson:

Why is Bandcamp profitable and Spotify not? The answer we arrived at was that Bandcamp provides a simple platform for complex transactions, while Spotify is a technically complicated platform for facilitating a single transaction in the form of the one-size-fits-all subscription.

AI and Creativity

This is a repost from Ted Gioia’s Substack. The use of AI for journalistic writing and editing will be a real threat to those livelihoods. Not so much for music or long-form books.

I Talk with an AI Music Historian

Maybe a chatbot can teach me a few things

Excerpt:

Ted: Okay, okay—that’s enough. Let’s try something else. You mentioned the blues earlier. If I want to learn more about the blues, where should I start?

AI Music Historian: The blues is a genre that has had a huge impact on modern music, influencing everything from rock and roll to hip-hop. Some of the most influential blues musicians of all time include:

- Robert Johnson is often regarded as the king of the Delta Blues, a style of blues that originated in the Mississippi Delta region. His music is characterized by his intricate fingerpicking style and haunting, often melancholic lyrics. Other notable Delta Blues musicians include Charley Patton, Son House, and Bukka White.

- Another important style of blues is the Chicago Blues, which emerged in the 1940s and 1950s. Some of the most famous Chicago Blues musicians include Muddy Waters, Howlin’ Wolf, and Willie Dixon. Chicago Blues is characterized by its use of amplified instruments and more band-oriented arrangements.

- Some of the earliest recorded blues musicians include W.C. Handy, who is often referred to as the father of the blues, and Blind Lemon Jefferson, who was one of the most popular blues musicians of the 1920s. . . .

Ted: Alright already—and I agree with all that. But let’s shift gears again.

Fix Social Media?

Reprinted here from the WSJ.

Yes, there will be many alternatives to mass social media that make far more sense to users. The best regulation is competition, so oversight boards can just make sure there is transparency and open competition among platforms because all platforms will attempt to create moats to protect their user data networks. The end of WEB2.0 social media dominance is is when users get paid for data contributions that enhance the value of the network. In a word, blockchain.

There’s No Quick Fix for Social Media

January 20, 2023

By Suzanne NosselSocial media is in crisis. Elon Musk’s Twitter is still afloat, but the engine is sputtering as users and advertisers consider jumping ship. The reputationally-challenged Meta, owner of Facebook and Instagram, is cutting costs to manage a stagnating core business and to fund a bet-the-farm investment in an unproven metaverse. Chinese-owned TikTok faces intensifying scrutiny on national security grounds.

Many view this moment of reckoning over social media with grim satisfaction. Advocates, politicians and activists have railed for years against the dark sides of online platforms—hate speech, vicious trolling, disinformation, bias against certain views and the incubation of extremist ideas. Social media has been blamed for everything from the demise of local news to the rise of autocracy worldwide.

Social media reflects and intensifies human nature, for good and for ill.

But the challenge of reining in what’s bad about social media has everything to do with what’s good about it. The platforms are an undeniable boon for free expression, public discourse, information sharing and human connection. Nearly three billion people use Meta’s platforms daily. The overwhelming majority log in to look at pictures and reels, to discover a news item that is generating buzz or to stay connected to more friends than they could possibly talk to regularly in real life. Human rights activists, journalists, dissidents and engaged citizens have found social media indispensable for documenting and exposing world-shaking events, mobilizing movements and holding governments accountable.

Social media reflects and intensifies human nature, for good and for ill. If you are intrigued by football, knitting or jazz, it feeds you streams of video, images and text that encourage those passions. If you are fascinated by authoritarianism or unproven medical procedures, the very same algorithms—at least when left to their own devices—steer that material your way. If people you know are rallying around a cause, you will be sent messages meant to bring you along, whether the aim is championing civil rights or overthrowing the government.

Studies show that incendiary content travels faster and farther online than more benign material and that we engage longer and harder with it. The bare algorithms thus favor vitriol and conspiracy theories over family photos and puppy videos. For the social-media companies, the clear financial incentive is to keep the ugly stuff alive.

The only viable approach, though painstaking and unsatisfying, is to mitigate harms through trial and error.

The great aim of reformers and regulators has been to figure out a way to separate what is enriching about social media from what is dangerous or destructive. [Most social media sites should enforce non-anonymity to reduce destructive behavior. At the same time they can create incentives to reward productive behaviors.] Despite years of bill-drafting by legislatures, code-writing in Silicon Valley and hand-wringing at tech conferences, no one has figured out quite how to do it.

Part of the problem is that many people come to this debate brandishing simple solutions: “free speech absolutism,” “zero tolerance,” “kill the algorithms” or “repeal Section 230” (Section 230 of the 1996 Communications Decency Act, which shields platforms from liability for users’ speech). None of these slogans offers a true or comprehensive fix, however. Mr. Musk thought he could protect free speech by dismantling content guardrails, but the resulting spike in hate and disinformation on Twitter alienated many users and advertisers. In response he has improvised frantically, resurrecting old rules and making up new ones that seem to satisfy no one.

The notion that there is a single solution to all or most of what ails social media is a fantasy. The only viable approach, though painstaking and unsatisfying, is to mitigate the harms of social media through trial and error, involving tech companies and Congress but also state governments, courts, researchers, civil-society organizations and even multilateral bodies. Experimentation is the only tenable strategy.

Well before Mr. Musk began his haphazard effort to reform Twitter, other major platforms set out to limit the harms associated with their services. In 2021, I was approached to join Meta’s Oversight Board, a now 22-person expert body charged with reviewing content decisions for Facebook and Instagram. As the CEO of PEN America, I had helped to document how unchecked disinformation and online harassment curtail free speech on social media. I knew that more had to be done to curb what some refer to as “lawful but awful” online content.

The Oversight Board is composed of scholars, journalists, activists and civic leaders and insulated from Meta’s corporate management by various financial and administrative safeguards. Critics worried that the board was just a publicity stunt, meant to shield Meta from well-warranted criticism and regulation. I was skeptical too. But I didn’t think profit-making companies could be trusted to oversee the sprawling digital town square and also knew that calling on governments around the world to micromanage online content would be treated by some as an invitation to silence dissent. I concluded that, while no panacea, the Oversight Board was worth a try.

The board remains an experiment. Its most valuable contribution has been to create a first-of-its-kind body of content-moderation jurisprudence. The board has opined on a range of hard questions. It found, for instance, that certain Facebook posts about Ethiopia’s civil war could be prohibited as incitements to violence but also decided that a Ukrainian’s post likening Russian soldiers to Nazis didn’t constitute hate speech. In each of the 37 decisions released so far, the board has issued a thorough opinion, citing international human-rights law and Meta’s own professed values. Those opinions are themselves an important step toward a comprehensible, reviewable scheme for moderating content with the public interest as a guide.

Ultimately, the value of Meta’s Oversight Board and similar mechanisms will hinge on getting social media platforms to expose their innermost workings to scrutiny and implement recommendations for lasting change. Meta deserves credit for allowing leery independent experts to nose into its business and offer critiques. Still, the board has sometimes had trouble getting its questions answered. In a step backward, the company has quietly gutted Crowdtangle, an analytics tool that the board and outside researchers have used to examine trends on the platform. The fact that Meta can close valuable windows into its operations at will underscores the need for regulation to guarantee transparency and public access to data.

Starting with a structural approach allows federal lawmakers to delay taking measures that raise First Amendment concerns.

Such openness is at the heart of two major pieces of legislation introduced in Congress last year. The Platform Accountability and Transparency Act would force platforms to disclose data to researchers, while the Digital Services Oversight and Safety Act would create a bureau at the Federal Trade Commission with broad authority and resources. But progress on the legislation has been slow, and President Biden has rightly called on Congress to get moving and begin to hold social media companies accountable.

The bills aim to address two prerequisites for promoting regulatory trial and error: ensuring access to data and building oversight capability. Only by prying open the black box of how social media operates—the workings of the algorithms and the paths and pace by which problematic content travels—can regulators do their job. As with many forms of business regulation—including for financial markets and pharmaceuticals—regulators can be effective only to the extent that they’re able to see what’s happening and get their questions answered.

Regulatory bodies also need to build muscle memory in dealing with companies. Though we have moved beyond the spectacle of the 2018 Senate hearing in which lawmakers asked Mark Zuckerberg how he made money running a free service, only trained, specialized and dedicated regulators—most of whom should be recruited from Silicon Valley—will be able to keep pace with the world’s most sophisticated engineers and coders. By starting with these structural approaches, federal lawmakers can delay entering the fraught terrain of content-specific measures that will raise First Amendment concerns.

The inherent difficulty of content-specific regulations is already apparent in laws emerging from the states. Those who believe conservative voices are unfairly suppressed by tech companies notched a victory when a split panel of the U.S. Fifth Circuit Court of Appeals upheld a Texas law barring companies from excising posts based on political ideology. The Eleventh Circuit went the opposite way, upholding a challenge to a similar law championed by Florida Gov. Ron DeSantis. The platforms have vociferously opposed both measures as infringing on their own expressive rights and leeway to run their businesses.

This term the Supreme Court is expected to adjudicate the conflicting rulings on the Texas and Florida cases. Both laws have been temporarily stayed; their effects are untested in the marketplace. Depending on what the Supreme Court decides, we may learn whether companies are willing to operate with sharply limited discretion to remove posts flagged as disinformation, hate speech or trolling. The prospect looms of a complex geographic patchwork where posts could disappear as users cross state lines or where popular platforms are off-limits in certain states because they can’t or won’t comply with local rules. If Elon Musk’s efforts at Twitter are any indication, recasting content moderation with the aim of eliminating anticonservative bias is a messy proposition.

Regulatory experiments affecting content moderation should be adopted modestly, with the aim of evaluating and tweaking them before they’re reauthorized. There have been numerous calls, for example, to repeal Section 230 and make social-media companies legally liable for their users’ posts. Doing so would force the platforms to conduct legal review of posts before they go live, a change that would eliminate the immediacy and free-flowing nature of social media as we know it.

Virtually any proposed measure can be criticized for either muzzling too much valuable speech or not curbing enough malign content.

But that doesn’t mean Section 230 should be sacrosanct. Proposals to pare back or augment Section 230 to encourage platforms to moderate harmful content deserve careful consideration. Such changes need to be assessed for their impact not just on online abuses but also on free speech. The Supreme Court will hear two cases this term on the liability of platforms for amplifying terrorist content, and the decisions could open the door to overhauling Section 230.

While the U.S. lags behind, legislative experimentation with social media regulation is proceeding apace elsewhere. A 2018 German law imposes multimillion euro fines on large platforms that fail to swiftly remove “clearly illegal” content, including hate speech. Advocates for human rights and free expression have roundly criticized the law for its chilling effect on speech.

Far more ambitious regulations will soon make themselves felt across Europe. The EU’s Digital Services Act codifies “conditional liability” for platforms hosting content that they know is unlawful. The law, which targets Silicon Valley behemoths like Meta and Google, goes into full effect in spring 2024. It will force large providers to make data accessible for researchers and to disclose how their algorithms work, how content moderation decisions are made and how advertisers target users. [Transparency] The law relies heavily on “co-regulation,” with authorities overseeing new mechanisms involving academics, advocacy groups, smaller tech firms and other digital stakeholders. The set up acknowledges that regulators lack the necessary technical savvy and expertise to act alone.

A complementary EU law, the Digital Markets Act, goes into effect this May and targets so-called “gatekeepers”—the largest online services. The measure will impose new privacy restrictions, antimonopoly and product-bundling constraints, and obligations that make it easier for users to switch apps and services, bringing their accounts and data with them.

Taken together, these measures will fundamentally reshape how major platforms operate in Europe, with powerful ripple effects globally. Critics charge that the Digital Services Act will stifle free expression, forcing platforms to act as an arm of government censorship. Detractors maintain that the Digital Markets Act will slow down product development and hamper competition.

The new EU laws illustrate the Goldilocks dilemma of social media regulation—that virtually any proposed measure can be criticized for either muzzling too much valuable speech or not curbing enough malign content; for giving social-media companies carte blanche or stifling innovation; for regulating too much or not enough. Getting the balance right will require time, detailed and credible study of the actual effects of the measures, and a readiness to adjust, amend and reconsider.

Whatever the regulatory framework, content-moderation strategies need to be alive to fast-evolving threats and to avoid refighting the last war. In 2020, efforts to combat election disinformation focused heavily on the foreign interferences that had plagued the political process four years earlier. Mostly overlooked was the power of domestic digital movements to mobilize insurrection and seek to overturn election results.

There will be no silver bullet to render the digital arena safe, hospitable and edifying. We must commit instead to fine-tuning systems as they evolve. The end result will look like any complex regulatory regime—some self-regulation, some co-regulation and some top-down regulation, with variations across jurisdictions. This approach might seem unsatisfying in the face of urgent threats to democracy, public health and our collective sanity, but it would finally put us on a path to living responsibly with social media, pitfalls and all.

Ms. Nossel is the CEO of PEN America and the author of “Dare to Speak: Defending Free Speech for All.”

Life in the Metaverse

This article on the efforts of Big Tech, specifically META, to corner the market in VR exposes some of the myths of Web3.0. Zuckerberg’s concept of VR is Web2.0 on steroids, as Jaron Lanier makes clear.

From Tablet:

Life in the Metaverse

Excerpt:

“What’s going on today with a company like Google or Meta is that they’re getting all this data for free from people who don’t understand the meaning or the value of the data and then turning it into these algorithms that are mostly used to manipulate the same people,” Lanier told Kara Swisher on “The Metaverse: Expectations vs. Reality,” a New York Times podcast. For Lanier, the ultimate point of the Quest Pro is not to provide users with a valuable experience but to popularize a tool that can collect their data at the source.

The Future Will Soon Be Here

I repost Ted Gioia’s Substack post here because I couldn’t have said it all better myself. As he outlines it, this has been tuka‘s approach all along. The one thing he doesn’t address is the winner-take-all aspect of creative markets – why all these YT revenues end up going to a few mass influencers or curators. Blockchain and tokenization are necessary tools to decentralize and distribute rewards for value created.

A Creator-Driven Culture is Coming—and Nobody Can Stop It

By Ted Gioia

“Victory is assured!”

I’m talking about victory for creative professionals—musicians, writers, visual artists, and others who have been squeezed by the digital economy.

You’re probably surprised. Some people think I’m the Dr. Doom of the music scene. And it’s true, I’ve made a lot of depressing predictions over the last few years, Even more depressing, many of these predictions have already come true.

I’ve told horror stories about musicians who lost their gigs during the pandemic, and also saw their music royalties collapse as the audience shifted to streaming. I’ve talked about journalists fired from downsizing newspapers. And filmmakers who can’t get funded to make a movie unless a Marvel superhero is named in the title .

But now I want to tell you the rest of the story. Because the next phase in the cycle is filled with good news.

Victory is assured.

Let’s start by looking at the music business, where the squeeze has been the worst.

Whenever I do a forecast, my first step is to follow the money. And the adage that money talks has never been truer than right now. Those dollars are telling an amazing and unexpected story. Word on the street is that record labels are offering far more attractive terms to musicians than ever before.

“Here’s my craziest prediction. In the future, single individuals will have more impact in launching new artists than major record labels or streaming platforms.”

In the old days, musicians were lucky to get 15% of revenues, but I’m now hearing increasingly about deals that give artists a 50% cut, and in some instances allow them to regain ownership of their master recordings at a future date. The music moguls are positively generous—and (as we shall see) for structural reasons in the business that aren’t going away.

And it’s not just major labels giving more money to musicians. Take a look at Bandcamp, which lets musicians collect almost 90% of revenues from vinyl sales. And I’m hearing constantly from techies and entrepreneurs who are working on similar artist-centric business models. We are only in the early innings of this new game, but the shift is already enough to force huge concessions from legacy music companies.

Artist-friendly platforms are the future of music. And other creative pursuits as well—my own platform, Substack, is also allowing creators to keep close to 90% of revenues. This has spurred a huge talent migration from old media, and not merely for writers—you can find almost every kind of creative professional on Substack, from cartoonists to photographers.

For 25 long, hard years, creative professionals have been told that you must give things away for free on the Internet. But not anymore. Alternative economic models are not only emerging, but are propelling the fastest-growing platforms in arts and entertainment.

This is not only shaking up highbrow and popular culture, but capturing the attention of the next generation of tech visionaries—which is why, in the last year or so, I’ve been constantly approached by startups asking me to evaluate their business plans. This is unprecedented. It simply didn’t happen before the pandemic. But not only are these entrepreneurs trying to figure out what artists want, but they’re actually relying on creator wealth maximization as the focal point for their businesses.

In general, these young techies are smarter than the folks running the music business right now. (That’s a subject I want to discuss at a later date—I call it my ‘idiot nephew theory’ of the music business. But it has to wait.) Of course, many of these entrepreneurs are dreamers who will never go anywhere. That’s always the case with entrepreneurs. But some will succeed, and in a meaningful way.

In fact, it’s inevitable—and for the simple reason that the old institutions have stopped investing in the future. The new guard will take over because the old guard got weak and lazy.

Why is all this happening? Let’s go back to look at the music situation, because this helps us understand the larger picture.

Record labels are getting more generous because they don’t have a choice. They destroyed their own power base and source of influence. They stopped investing in R&D and new consumer technologies back in the 1980s. Twenty years later, they stopped manufacturing and distributing physical albums—and even when vinyl took off, they were asleep at the wheel. Over the same time period, they lost their marketing skills, trusting more in payola and influence peddling of various sorts.

But that’s just a start. Over a fifty-year period, record labels relentlessly dumbed-down their A&R departments. They shut down their recording studios, and let musicians handle that themselves—often even encouraging artists to record entire albums at home. Then they let huge streaming platforms control the relationship with consumers. At every juncture, they opted to do less and less, until they were left doing almost nothing at all.

The music industry’s unstated dream was to exit every part of the business, except cashing the checks. But reality doesn’t work that way. If you don’t add value, those checks eventually start shrinking.

The simple fact is that the legacy music business is living off the past—and will continue to do so until the copyrights expire. For a few more years, they will collect royalties on old songs, and make money on reissues and archival material. They know themselves that they have lost control of the future of music. That’s why, if they have spare cash, they use it to buy up catalogs and publishing rights of music from back in the day. Their favorite artists are dead artists.

But this is not a long-term game. It’s a death wish.

The major labels would like to own the music stars of the future, but they won’t. They would like to act greedy and put the squeeze on the next generation, but they can’t. They simply don’t have the leverage. And never will again.

And who will win if record labels lose? You think it might be the streaming platforms? Think again—because that’s not going to happen. Spotify and Apple Music are even less interested than the major labels in nurturing talent and building the careers of young artists.

Here’s my craziest prediction. In the future, single individuals will have more impact in launching new artists than major record labels or streaming platforms.

Just consider this: There are now 36 different YouTube channels with 50 million or more subscribers—and they’re often run by a single ambitious person, maybe with a little bit of support help. In fact, there are now seven YouTube channels with more than 100 million subscribers. By comparison, the New York Times only has nine million subscribers.

“How could a Substack column outbid major media outlets for new talent? But not only can it happen, it will inevitably happen.”

Most people don’t stop and think about the implications of this. But just ponder what it means when some dude sitting in a basement has ten times as much reach and influence as the New York Times.

If you run one of these channels and have any skill in identifying talent, you can launch the next generation of stars.

And not just in music. This works for everything—comedy, dance, animation, you name it.

Consider the case of MrBeast. Many of you have no idea what I’m talking about, but you need to find out—because MrBeast (or people like him) are going to change popular culture, whether we like it or not. MrBeast, for a start, runs 18 YouTube channels with more than 200 million total subscribers. He now has his videos translated into four languages: Spanish, Portuguese, Hindi, and Russian.

What does he do? I’m no expert on MrBeast, but I’m told he’s a good dude, and gives away a lot of money—huge sums, to be blunt. And he can afford to do it, because YouTube channels are low-overhead operations with enormous cash generation potential.

Oh, I forgot to mention that MrBeast is looking to raise capital from financial investors. He claims that his business is worth $1.5 billion, and may sell 10% to get $150 million to fund his future plans.

I’m not even beginning to pretend that MrBeast will use this money to get into the music business. But he might. And if he doesn’t, someone else like him will.

MrBeast has got the cash to shake up the music business—and if he doesn’t, someone like him will

I note that MrBeast only ranks number five in YouTube channel subscriptions. There are other people like him, or will be soon, and they are much better equipped to launch a new music act than any of the major labels.

That’s why musicians can make more money when the distribution model shifts from bloated record labels with huge overhead to alternative web-based platforms. I expect that deals for artists on these web channels will be more like 50/50. MrBeast is known for his generosity, but even if he wasn’t, his business model is much more flexible than anything Sony or Universal Music could ever dream of. These new platforms can afford to offer better terms to creators, and almost certainly will—because if they don’t someone else will.

This didn’t take place during the first wave of YouTube channels, because these influencers (I hate the term, but it’s appropriate in this setting) were focused on making themselves into money-making stars. But the next phase of growth for these people is brand extension, and that’s going to turn them into talent scouts.

I’m focusing on YouTube channels here, but the same story could be told about podcasters—or any other individual with a lock on an audience in the tens of millions. Consider them as the equivalent of the Ed Sullivan Show in the old days. The host of this long-running TV show didn’t have much talent himself, but it hardly mattered—Ed Sullivan was the curator who introduced America to Elvis Presley, the Beatles, and other rising stars. That kind of thing will happen again, but via a web channel or alternative platform.

These individuals can do absolutely everything a record label currently does, and do it better—they can launch new stars, get them instant visibility and gigs, generate millions of views for new songs, attract endorsement deals, etc. The few things they can’t do in-house (for example, press vinyl records) can be easily outsourced.

The same thing will happen in publishing. I’m already seeing a few of the more popular Substack writers using their huge subscriber base to launch the careers of other writers. By my calculations, this can be even more profitable and impactful than a book contract with a New York publisher—benefiting both the sponsoring writer and the new talent.

In fact, I might move in this direction myself. It’s too early, but 2-3 years from now I might start scouting out talented young writers or podcasters and feature them here in The Honest Broker. Everything depends on subscription numbers, but it’s possible that I could pay better than the New York Times or the Wall Street Journal.

At first blush, this seems impossible. How could a Substack column outbid major media outlets for new talent? But not only can it happen, it will inevitably happen. It’s the same story as in music. The old guard has stopped defending its base business, and everything is either up for grabs now—or will be very soon.

Newspapers have lost enormous power over the last twenty years. They have lost subscribers. They have lost ad revenues. They have even, in many instances, lost credibility and respect. Up until now, this has hurt writers—who depended on the newspapers for assignments and pay checks. But we are now arriving at the point where the trend reverses.

And this reversal opens up huge opportunities and income potential for smarter, nimbler operators.

I could give many other examples. What I’m describing is also true for Hollywood movie studios, book publishing, and every other field where the old guard has become arteriosclerotic and inflexible.

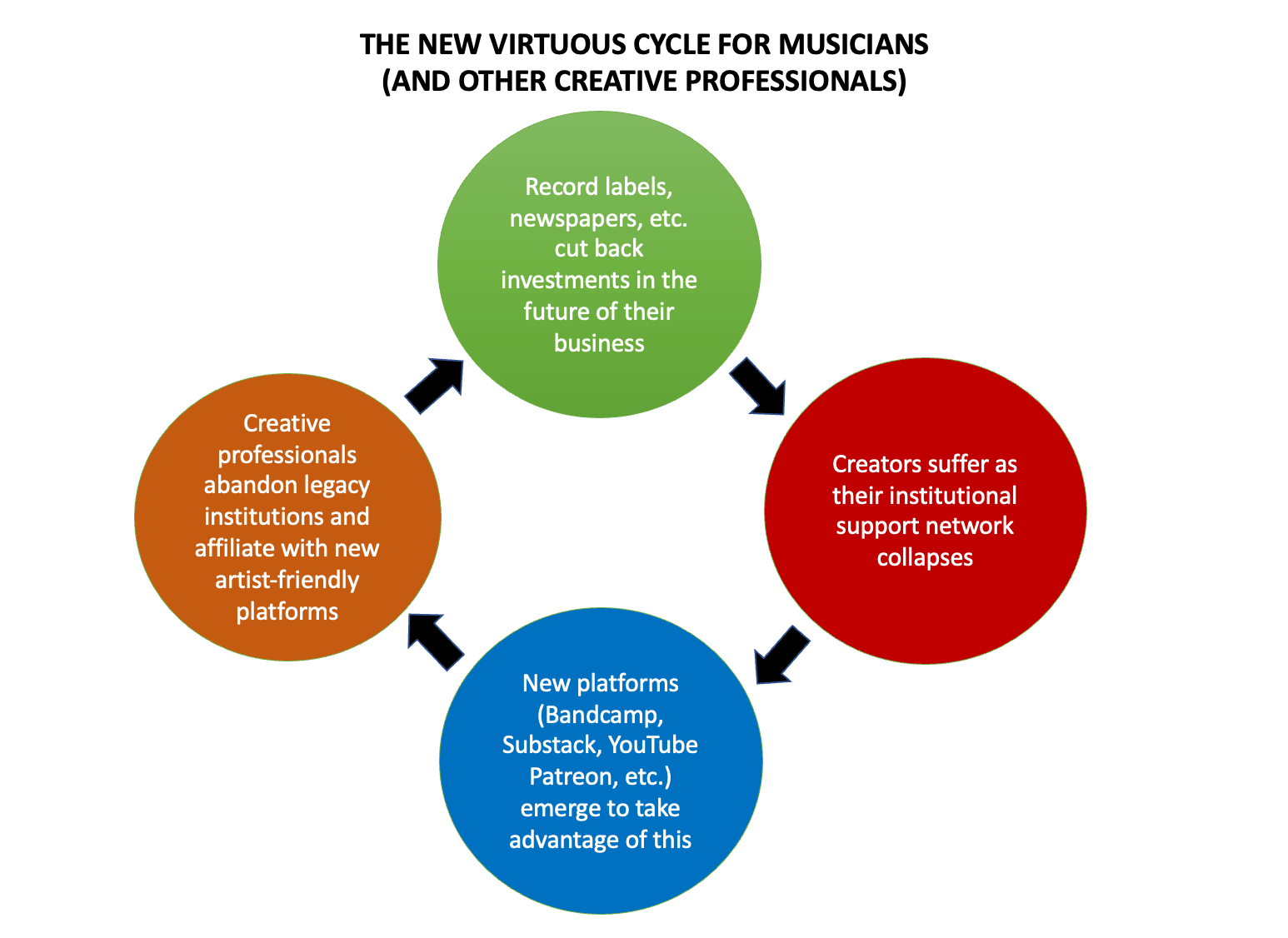

Okay, let me summarize the whole thing in a diagram.

[Note: tuka is in the blue circle here.]

[Note: tuka is in the blue circle here.]

There’s an elegant irony here. The very same forces putting the squeeze on creators actually serve to accelerate the happy next phase.

This is one reason why I believe karma is at work in the universe. If you run a business that depends on creativity, you can’t punish the creators without consequences. Sometimes it takes a while for the cycle to play out, but it always plays out the same way.

There are many aspects of this story I haven’t covered here. There are all sorts of Web3 angles, and there’s also a story to be told about how platforms such as Spotify will pay a price for squeezing musicians to subsidize their entry into podcasting and other ancillary businesses. But we can look at those on another occasion.

For the time being, I just want you to keep your eyes on the prize. And remember—Victory is Assured!

Creativity and Community

This is the whole purpose [of art], to bring people that don’t agree into the same place where they can agree on their own shared humanity.

This Matt Taibbi interview with Tim Robbins is interesting for Robbins’s views on art and creativity. Emphases in BOLD.

Tim Robbins and the Lost Art of Finding Common Ground

The star of films like The Producer and Bull Durham opens up about two tough years of pandemic politics, and worries society is purposefully phasing out the common meeting space

Matt Taibbi

3 hr ago

After the Covid-19 crisis began, actor Tim Robbins was like everyone else in suddenly having both more time to think, and more unpleasant things to think about. Among other things, as a leader of The Actors’ Gang theater company, Robbins had to work through what living in a world of mandated long-term isolation might mean. What if people were no longer forced into contact with one another?

“I wondered,” he recalls now, “‘What happens when you eliminate the water cooler conversation?’”

Would we miss that “difficult conversation with someone who’s not one of your friends, but a coworker and a human being,” who’s “saying something that is not the way you see the world, but he’s right there and you have to hear it”? Robbins felt we might, because confronting a live human being forces people to use parts of their brain the Internet encourages them to bypass.

“When you eliminate that conversation, and everyone goes into isolation, and has their own little silos of thought, that’s incredibly dangerous for society. Because now you’re isolated to the point where you’ll no longer have any kind of discussion,” he says. Instead, he worries, “You’ll just have that little room you go into where everyone agrees with you, and we all say, ‘Fuck those other people.’”

Years later, the Oscar-winning actor known for left-liberal advocacy finds his thinking has shifted in significant ways. In part this is because the entertainment business remains mired in high-vigilance mode when it comes to pandemic restrictions, with an omerta still hovering over vaccine-related questions. Robbins himself was with the program early, which he now seems to regret. “I was guilty of everything that I came to understand was not healthy,” he says now. “I demonized people.”

However, he soon began to wonder why certain rules were being kept long after they lost real-world utility. For instance, deals struck in 2021 between studios and powerful unions like SAG-AFTRA, the Directors’ Guild of America, and Actors’ Equity barred the unvaccinated not just from working, but auditioning. This maybe, possibly made sense when the vaccines were thought to prevent transmission. But now?

“I get it. I understand the fear. I was there,” Robbins says. “But we’ve restricted people from working for too long.”

For decades Robbins occupied a unique role in American popular culture as a writer, director, and polarizing counterculture figure, like a taller, cheerier cross of Orson Welles and Peter Fonda. His acting reputation for a long time was inextricably (and unfairly, I always thought) tied to his status as a bugbear of the Gingrich/Bush Republican right. I knew without looking that Robbins had to be a central figure in Fox host Laura Ingraham’s 2006 best-selling insta-book about heathen lib entertainers who don’t know their place, Shut Up and Sing. Ingraham in fact described Robbins and former partner Susan Sarandon as “the leading stars of today’s Hollywood elite,” a compliment on the order of being called Mr. and Mrs. Satan. Ironically, Ingraham was mad at Robbins for talking about a “chill wind” of intellectual conformity that began blowing after 9/11, the same phenomenon she herself began railing against when it started to affect conservatives in recent years, and which Robbins is still criticizing now.

Though he’s won acclaim for serious films like Shawshank Redemption and Dead Man Walking, the Robbins filmography is also packed with performances where he goofs on himself by pitching in a garter belt (Bull Durham) or wearing absurd hair extensions (in Erik the Viking — I hope those were extensions)or by giving America maybe its best-ever a portrait of a Hollywood douchebag, a performance that almost had to be career-imperiling in its accuracy (The Player). Robbins always had strong views and was especially vocal during the Iraq period, but his politics never got in the way of helping deliver some of the generation’s most enjoyable films. He even had fun with his own lefty reputation when he played a PBS newscaster taking a break between pledge drives to throw down in the Anchorman brawl.

Nonetheless, he now finds himself mixed up in controversies that place him at least somewhat on the outs with the same Hollywood political culture where he was once a leading figure. Areas of contention include the mandates and the passage of AB 5, a piece of California legislation originally aimed at gig-worker corporations like Lyft and Uber that ultimately forced hundreds of businesses, including theater companies, to offer minimum wages and benefits many claim they can’t afford. It’s a repeat of a controversy from the mid-2010s, when the Actors’ Equity union beat back a lawsuit filed by the likes of Ed Harris and Ed Asner and overrode a 2-1 vote by thousands of its members, who wanted to retain the ability to play for peanuts in a city where exposure is worth more than a few extra bucks on a paycheck.

Robbins worries that in this slew of new shibboleths about everything from vaccines to regulation of cake decorators, music arrangers and theater companies, society is revealing troubling changes in its ideas about what art and creativity are for. He sees hostility to the idea of bringing people together both in the physical sense, as in opening the doors to a theater, but also in the figurative sense of making sure art and entertainment are for everyone, not just for people with correct opinions. With bookstores, museums, theaters, and even water coolers disappearing all over the country, America seems to have it in for common spaces, as if keeping people from talking to one another is someone’s intentional political goal.

“I almost feel like there are forces within our society that just want art to die,” Robbins says.

The interview you’re about to read isn’t a red-pilling story, since Robbins isn’t and won’t ever be anyone’s idea of a conservative. It is however a warning from someone with an extensive enough track record as a progressive activist that he ought at least to have earned a hearing if he now feels he has to say a few uncomfortable things. Mostly, he’s warning about a didactic meanness he senses creeping into both politics and art. This he felt especially during the Covid-19 period, when we drifted from mere health policy into a bizarre Freaks-style collective shaming reflex, stressing the moral and mental unworthiness of people who for whatever reason — there were many — refused official advice.

“I heard people saying, ‘If you didn’t take the vaccine and you get sick, you don’t have a right to a hospital bed,” he says. “It made me think about returning to a society where we care about each other. Your neighbor would be sick, and you’d bring over some soup. It didn’t matter what their politics were, you’re their fucking neighbor,” he says, shaking his head.

“I think we lost a lot of ourselves during this time.”

More below (interview edited for length and clarity):

Matt Taibbi: When Covid-19 arrived, what happened with The Actors’ Gang?

Tim Robbins: We had to shut down, obviously. We went on to a Zoom workshop kind of mode. As an organization, we decided that we weren’t going to lay anyone off or furlough anyone. A lot of arts organizations did. We kept everyone on staff and on health insurance. We found other ways to do our work online with our education programs. Then for our prison project, we started communicating by mail. We would send them packets every month with outlines of exercises they could do on their own. A lot of them would write, and send it back to us, because we wanted to keep the relationship going with the people that we were working with before the pandemic.

So that was great. It provided us an opportunity to hire more returning citizens, the ones that had done their time and were being paroled, which was another bizarre thing — you had guys that were in jail for 30 years that got out right during Covid, and went right back into isolation. Isn’t that insane? But overall, it was a difficult two years.

Matt Taibbi: As re-opening approached, what happened?

Tim Robbins: We were capable of opening last September, but there were still all of these restrictions. I had a problem with this idea of having a litmus test at the door for entry. I understood the health concerns, but I also understand that theater is a forum and it has to be open to everybody. If you start specifying reasons why people can’t be in a theater, I don’t think it’s a theater anymore. Not in the tradition of what it has always been historically, which is a forum where stories are told and disparate elements come together and figure it out.

That’s what it’s been for. People figure out their relationship with the gods, with society, with each other. But at the door, you don’t say you can’t come in, because you haven’t done this or that. I had a problem with that. So I waited until everyone could be allowed in the theater. We opened up with a show called Can’t Pay Don’t Pay in April last year.

I think a lot of theaters had a problem rebounding, because the audiences are either skittish about being in rooms with other people, or (laughs) they just don’t like theater that much anyway. The pandemic was a good excuse to not go!

But the most challenging thing has been dealing with the actors themselves, because there is this skittishness and fear, and it’s still in people. Unlike England — I was lucky enough to spend a lot of time last year in London, and they got back a lot sooner than we did. There was an attitude there, it’s that “keep calm and carry on” thing. You know, we got bombed last night, but we’re getting up today. When they reopened their theaters, they reopened them for everybody. They never excluded anybody. And when they got back to it, their West End was thriving, and continues to thrive today.

Maybe the reason we have problems is that some people are still skittish about being in a crowd with other people, but it could also be that maybe 30 to 40% of theater audiences were told they weren’t welcome. And maybe there’s something in that: when you’re told you’re not welcome, you might not necessarily want to go back.

Matt Taibbi: When you started to question these things, what was the reaction?

Tim Robbins: I totally understood it in the first year. I was compliant with everything. I locked down, I isolated, I was away from people for seven months. I bought into it. I demonized people. I was guilty of everything that I came to understand was not healthy. I was angry at people that weren’t wearing masks, and protesting about it in Orange County. Yet, a month later I was protesting for BLM in the streets with a mask on. A week after that, I kind of had to do a self-check on that. I knew there was a little bit of hypocrisy going on there.

I had a really good friend that died from it early on. I was angry. I was fearful, and I did everything I could to help stop the spread, but also I kept my eyes open and at my age, I think one of the most important things that I’ve been able to do is understand that I’m not right all the time, and I have to check myself and see where the hypocrisy lay. So I started having more questions.

Soon it’s a year on, and two years on, and people are still stuck with these restrictions despite the fact that we now know that the vaccine didn’t stop transmission and didn’t stop people from getting it. Once the CDC changes policy and says basically that both the vaccinated and the unvaccinated are capable of getting Covid, the restrictions don’t make sense anymore, particularly regarding employment.

And there were a lot of people in SAG-AFTRA and Actors’ Equity that were kept from auditioning for the past two and a half years, and really still are today. Their livelihoods are threatened. They can’t participate yet. There’s no rhyme or reason with it. I think people are holding on because there’s still a fear, but it’s too long now.

I’m not against the vaccine, I’m just of the belief that your health is determined by your relationship with your body and your mind. And if you believe that the vaccines have helped you, then all power to you. If for some reason you didn’t vaccinate and you made it through this, all power to you too. You shouldn’t be excluded from society for doing that. I am a hundred percent sure of that. I think that was a mistake. I think it was done out of fear. I forgive it, but to continue it at this point is irrational, in my opinion.

No one has stood up for people who might be immuno-compromised or couldn’t take the vaccine, or people that are just holistic and don’t take any kind of medicine at all. Or people — this is the most important one — people that have had Covid and have natural immunity.

The other thing is, where does this end? How many boosters do you have to get to remain eligible for work? How long do we extend this?

Matt Taibbi: You feel something important has been lost in the last few years. Can you elaborate?

Tim Robbins: These last few years, they’ve taught me so much, about what is right, what is wrong. There’s so much empowerment of people that feel that they are being incredibly virtuous and generous, yet are doing things that are not very kind to other people. I think we’ve lost ourselves during this time. Just a brief stroll through social media and you’ll find that out. (laughs) The internet has become like a bar that you go to, and you open the door, and everyone yells, “Fuck you! Get out!”

Matt Taibbi: (laughs) I’m vaguely familiar…

Tim Robbins: It’s amazing. It’s taught me a lot about human nature, about how easy it is for people to turn on other people, and that when people do things that are destructive to other people, they often think they’re being virtuous. It’s been that way throughout history.

That’s something I already knew as a writer. When you’re making a character, you try not to make it all black and white, good and evil. I really understood much more profoundly what happens with the turn, how people turn. You go from someone that is inclusive, altruistic, generous, empathetic, to a monster. Where you want to freeze people’s bank accounts because they disagree with you. That’s a dangerous thing. That’s a dangerous world that we’ve created. And I say ‘we,’ because I was part of that. I bought into that whole idea early on.

Matt Taibbi: There’s also been a phenomenon of bureaucratic mission creep. Could you talk about how that’s affected your industry?

Tim Robbins: Our union out here, Actors Equity, decided about five years ago to end an agreement that the union had with local theaters here that were under 99 seats. We had a thriving small theater scene in Los Angeles, and Actors Equity decided that they wanted to end that 99-seat agreement. Then they had a vote, and two-thirds of their membership voted to keep the agreement, but the AEA ended it regardless. Producers couldn’t make money off of productions. I think Actors’ Equity has a fantasy that if they close all the small theaters in Los Angeles, a bunch of mid-level theaters will rise up, and there will be more contracts…

On top of that, we had a bill pass called AB 5, which was intended to target gig workers like Uber and Lyft drivers. And Uber and Lyft were wealthy enough to lobby in Sacramento to get a carve-out from that legislation. What was left were small theaters and musicians. They said, “Listen, are you going to try to keep us from rehearsing, or just jamming?”

I almost feel like there are forces within our society that just want art to die. It’s now not only just the scolds from the right, like in the old days when the Moral Majority wanted art to die. Now it’s unions and people that are, again, claiming virtuous reasons for all of this. The truth is a lot of local theater has failed, and the pandemic helped put the nail in the coffin.

Small theater companies of people who just want a way in, in a business that’s so devoid of content. If you’re lucky, you get an audition for a walk-on in a sitcom, and how’s that feeding your artistic soul? So you had a huge amount of actors in Los Angeles that just wanted to do quality work, be in a play where they could get to say real lines by real authors. It was something they were volunteering for, that would keep their instrument sharp. And now they were being told they can’t do that.

Matt Taibbi: To what end, do you think?

Tim Robbins: Listen, Matt, if you told me 20 years ago that there would be no video stores where you could talk to a clerk and see what that person might be recommending, or no record stores where you could go see what’s new in music, or no bookstores in most towns, I would’ve told you you were crazy. But we’re here. This is part of a larger movement away from the gathering place.

Theaters are failing, and movie theaters are not doing so well. Any form of gathering place other than a bar has pretty much been hurting. You know, it’s no surprise to me how well sports have been doing during this whole period. Stadiums are packed because people need community.

I’ve always thought of baseball as a place where I can go and get away from the politics and just sit and high-five some dude that might have voted for someone I don’t like. That’s important.

Matt Taibbi: Art and movies used to play that role, too, but are they being discouraged in that function?

Tim Robbins: Yes. This is the whole purpose of theater, to bring people that don’t agree into the same place where they can agree on their own shared humanity.

That’s the other problem. It got incredibly politicized here. It wasn’t that way in London. What I felt there wasn’t the divide that there was here. I attended a couple of the marches that were happening in [early] 2021, which was when they were under their lockdown. There was a street presence of people who were coming out already, against mandates and passports. I went down and I talked to some of those people and I realized: it’s not a left-right thing there. These weren’t a bunch of National Front-type people. These were old hippies and homeopaths. I tweeted about it and I got this hellish response. I realized that we have been programmed in a different way in this country, to think that if someone doesn’t get the vaccine, they must be a Nazi.

Tim Robbins: I’m trying to understand why we’re in the situation we’re in, socially, with each other. That’s what concerns me the most. I believe that if the vaccine helps you, that’s great. But, I have kind of a hard line on freedom. You can’t over-regulate people’s lives. I don’t know what that makes me, what label that puts on me, but I am an absolutist on freedom.

I’ve done a lot of work in organizing and in protest movements and in building coalitions. Community building is always about an organizer walking into the room and knowing that the people in this room do not agree on everything. But I, as an organizer, have to find the linchpin, find the common thread. And when I find that, I’m going to build the movement around that.

What I’ve been seeing over the past few years has been the opposite of that. It’s going into a room and saying, “You don’t have the right to speak because you don’t agree with our way of thinking.” Or it’s, “You’re an idiot for thinking this or that. Shut up. Get your vaccination.”

You’re not going to build any movement that way. All you’ll do is alienate people. And whether it’s organizing around social justice or criminal justice reform or creating more equity — all legitimate important things that need to be done — organizers who know how to do it don’t create division. They don’t cancel people. Because once you’ve done that, you’ve lost those people forever. You’re not getting them back.

Matt Taibbi: Don’t art and movies try to do the same thing? You’re looking for the unifying theme, the thing everyone thinks is funny, or everybody enjoys? The linchpin that holds an audience together?

Tim Robbins: Trying to find the thing that unites us. Exactly. Right. You’re trying to find something that we all can laugh at or a shared feeling that we can all have.

Dead Man Walking was a real challenge because it was a dance we had to do. I didn’t want to make the movie just for people that were against the death penalty. I wanted to make it for everybody, and I wanted people to have a discussion about it. So we had to give dignity and screen time and respect to the people that had lost their family members, and were for the death penalty. And I thought we did it in a way that was respectful enough so that people, if they did not agree with getting rid of the death penalty, could still watch that film and see their shared humanity in the pain of the mother, of the pain of Sister Helen, in the pain of the killer himself.

That’s the difficult thing to do. But when you do that, then you create dialogue. Helen will tell you that that movie changed the picture for her. Beforehand, everywhere she went, 10 or 15 people showed up. Now she’s got a thousand people coming out, and they have a discussion about the death penalty. You know, we did a play version of it, and we were in 140 universities over the course of 10 years. There would be 30 young people getting together to do a play. And when the play would open, there would be symposiums from the law department, from the divinity school, from sociology departments. They would have discussions and meetings and debates, and the actors themselves would have to play parts that they didn’t necessarily agree with, and have to go into that mindset. And it created a fertile ground for discussion and for growth. People could respect each other and both sides of the opinion.

Throwing your doors open for the public means you throw them open to everybody. And once, no one even thought twice about that. It’s the decent thing to do. Then during the pandemic, I heard people saying, “If you didn’t take the vaccine and you get sick, you don’t have a right to a hospital bed.”

And I just started thinking, “What about all the junkies?” That’s the choice they made, too. It’s their own fucking vein. Are we kicking them out? No. You take care of them.

Matt Taibbi: Smokers, obese people who have diabetes…

Tim Robbins: You save their lives. Because they’re part of us. They may be troubled and they may be having to take these drugs for whatever emotional reasons they are, but what the hell man, you gotta take care of them.

And like you say, it could be that you apply that to obesity, you could apply that to any physical malady that has anything to do with something you put in your body. Well, that’s a choice that you made. Maybe a bad choice, but don’t worry about it. We got you. And then you have the choice as to whether you want to change your life or not.

That, for me, is a functioning society.